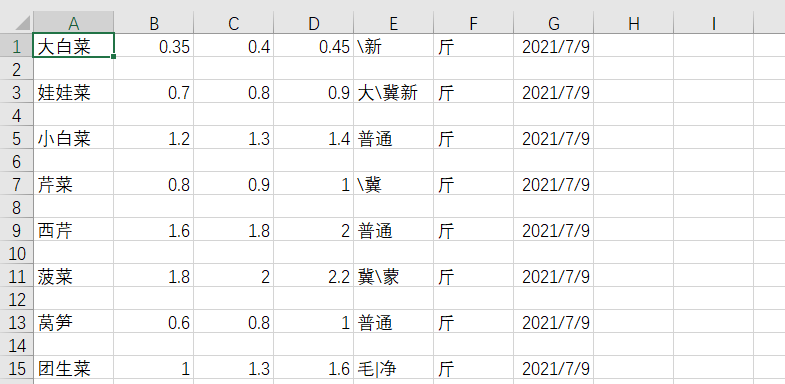

Practice 1: Scraping Vegetable Prices

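Looking up data in the bs object:

- `find(tag, attribute=value)`: returns the first matching tag
- `find_all(tag, attribute=value)`: returns a list of every matching tag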
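A minimal offline sketch of the two lookups, run against a small inline HTML string instead of a live page. The markup and its values are made up for illustration. It also shows that `class_="..."` and `attrs={"class": "..."}` select the same tag.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, not taken from the real site
html = """
<table class="hq_table">
  <tr><th>name</th><th>price</th></tr>
  <tr><td>cabbage</td><td>1.20</td></tr>
  <tr><td>carrot</td><td>0.80</td></tr>
</table>
"""
page = BeautifulSoup(html, "html.parser")

# find() returns the first matching tag, or None if nothing matches
table = page.find("table", class_="hq_table")
# The attrs dict is an equivalent way to filter on class
same_table = page.find("table", attrs={"class": "hq_table"})

# find_all() returns every matching tag
rows = table.find_all("tr")
cells = [td.text for td in table.find_all("td")]

print(table is same_table)  # True
print(len(rows))            # 3
print(cells)                # ['cabbage', '1.20', 'carrot', '0.80']
print(page.find("div"))     # None
```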

```python
import csv

import requests
from bs4 import BeautifulSoup

url = "http://xinfadi.com.cn/marketanalysis/0/list/1.shtml"
resp = requests.get(url)

# newline="" stops the csv module from writing blank lines between rows on Windows;
# the data/ folder must already exist
f = open("data/6.csv", mode="w", encoding="utf-8", newline="")
csv_writer = csv.writer(f)

# Parse the data
# 1. Hand the page source to BeautifulSoup to build a bs object
page = BeautifulSoup(resp.text, "html.parser")  # use the built-in HTML parser

# 2. Look up data in the bs object
# table = page.find("table", class_="hq_table")  # class is a Python keyword, hence class_
table = page.find("table", attrs={
    "class": "hq_table"  # the attrs dict also avoids the keyword clash
})

# Get all data rows, skipping the header row
trs = table.find_all("tr")[1:]
for tr in trs:
    tds = tr.find_all("td")  # every td cell in this row
    name = tds[0].text  # .text returns the text enclosed by the tag
    low = tds[1].text
    avg = tds[2].text
    high = tds[3].text
    gui = tds[4].text
    kind = tds[5].text
    date = tds[6].text
    csv_writer.writerow([name, low, avg, high, gui, kind, date])

f.close()
resp.close()
```
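To test the row-to-CSV step without hitting the site, here is a sketch that runs the same loop over a hypothetical table shaped like `hq_table` (a header row plus seven-column data rows; the values are invented) and writes into an in-memory `io.StringIO` buffer instead of a file. The list comprehension replaces the seven named variables but produces the same row.

```python
import csv
import io

from bs4 import BeautifulSoup

# Hypothetical sample markup mimicking the hq_table layout
html = """
<table class="hq_table">
  <tr><td>name</td><td>low</td><td>avg</td><td>high</td><td>spec</td><td>unit</td><td>date</td></tr>
  <tr><td>cabbage</td><td>0.50</td><td>0.60</td><td>0.70</td><td>ordinary</td><td>kg</td><td>2020-01-01</td></tr>
  <tr><td>carrot</td><td>0.80</td><td>0.90</td><td>1.00</td><td>ordinary</td><td>kg</td><td>2020-01-01</td></tr>
</table>
"""

def table_to_csv(html: str) -> str:
    """Convert every data row of the hq_table into CSV text."""
    page = BeautifulSoup(html, "html.parser")
    table = page.find("table", attrs={"class": "hq_table"})
    buf = io.StringIO()
    writer = csv.writer(buf)
    for tr in table.find_all("tr")[1:]:  # skip the header row
        writer.writerow([td.text.strip() for td in tr.find_all("td")])
    return buf.getvalue()

print(table_to_csv(html))
```

`csv.writer` ends each row with `"\r\n"` by default, which is why the file in the main example is opened with `newline=""`.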

Practice 2: Scraping Photos

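- Fetch the main page's source code, then extract the link address (href) of each sub-page
- Use each href to fetch the sub-page's content and find the image's download address in it
- Download the image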
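The first two steps above can be sketched offline with inline HTML. The markup below is hypothetical: it only imitates the `div.TypeList` link list and the `<p align="center">` image wrapper that the scraper targets, and `example.com` stands in for the real image host. `urljoin` from the standard library builds the full sub-page URL from a relative href.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE = "https://www.umei.net/"

# Hypothetical markup standing in for the real main page and sub-page
main_html = ('<div class="TypeList"><a href="/bizhitupian/a.htm">A</a>'
             '<a href="/bizhitupian/b.htm">B</a></div>')
child_html = '<p align="center"><img src="https://example.com/pics/a.jpg"></p>'

def child_links(html: str, base: str):
    """Step 1: collect full sub-page URLs from the main page."""
    page = BeautifulSoup(html, "html.parser")
    anchors = page.find("div", class_="TypeList").find_all("a")
    return [urljoin(base, a.get("href")) for a in anchors]

def image_src(html: str):
    """Step 2: find the image download address on a sub-page."""
    page = BeautifulSoup(html, "html.parser")
    return page.find("p", align="center").find("img").get("src")

links = child_links(main_html, BASE)
src = image_src(child_html)
print(links)  # ['https://www.umei.net/bizhitupian/a.htm', 'https://www.umei.net/bizhitupian/b.htm']
print(src)    # https://example.com/pics/a.jpg
print(src.split("/")[-1])  # a.jpg, the name step 3 would save the bytes under
```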

import requests

from bs4 import BeautifulSoup

import time

url = "https://www.umei.net/bizhitupian/fengjingbizhi/"

url1 = "https://www.umei.net/"

resp = requests.get(url)

resp.encoding = 'utf-8'

# 把源代码交给bs

main_page = BeautifulSoup(resp.text, "html.parser")

alist = main_page.find("div", class_="TypeList").find_all("a")

for a in alist:

href = a.get('href') # 直接通过get就可以拿到属性

# 拿到子页面的源代码

child_href = url1 + href.strip("/")

child_page_resp = requests.get(child_href)

child_page_resp.encoding = 'utf-8'

# 从子页面中拿到图片链接

child_page = BeautifulSoup(child_page_resp.text, "html.parser")

p = child_page.find("p", align="center")

img = p.find("img")

src = img.get("src")

# 下载图片

img_resp = requests.get(src)

# img_resp.content 这里取得是字节

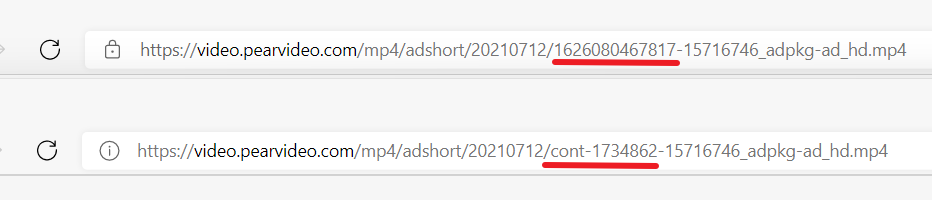

img_name = src.split("/")[-1] # 拿到url中最后一个/以后的内容

with open("img/" + img_name, mode="wb") as f:

f.write(img_resp.content) # 图片内容写入到文件

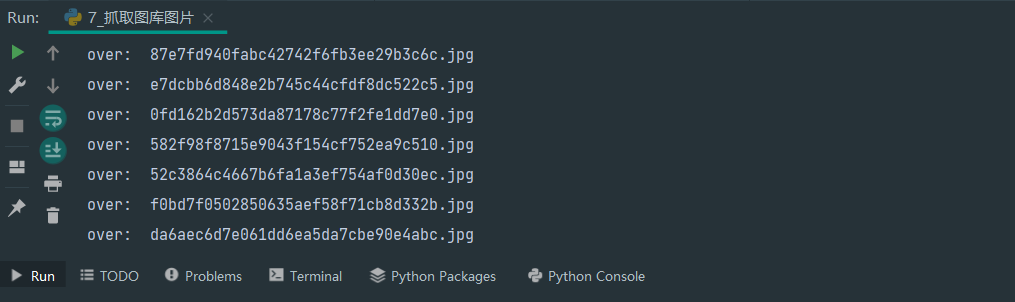

print("over: ", img_name)

time.sleep(1) # 反爬虫

resp.close()
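One caveat on naming files with `src.split("/")[-1]`: if an image URL carries a query string, the query ends up in the file name. A small sketch, assuming such URLs could occur (the URLs below are placeholders), that keeps only the last path segment by parsing the URL first:

```python
import posixpath
from urllib.parse import urlparse

def filename_from_url(src: str) -> str:
    """Return the last path segment of a URL, ignoring any query string or fragment."""
    return posixpath.basename(urlparse(src).path)

print("https://example.com/pics/a.jpg?v=2".split("/")[-1])      # a.jpg?v=2
print(filename_from_url("https://example.com/pics/a.jpg?v=2"))  # a.jpg
print(filename_from_url("https://example.com/pics/a.jpg"))      # a.jpg
```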